Space, Nuclear Power, New Worlds, and LLMs

The Unserious Economics of an AGI Revolution

Let’s talk about revolution—the industrial kind.

I am not an economist, but I understand systems. When one thing changes, everything around it, from people to processes, changes to adapt.

We keep hearing about the impending moment when humanity achieves Artificial General Intelligence (AGI) and Artificial Superintelligence (ASI), entering a period of prosperity and enlightenment devoid of poverty and disease.

However, humanity has been steadily progressing in economic growth, cultural enrichment, and advances in global health for millennia without AGI or ASI. Anticipating the continuation of that progress is not much of a prediction.

Some LLM advocates may argue this time is different because we have not seen anything like what is coming, which raises the question of how much they have learned about technological progress in the past 60 years.

Spend a few hours learning about the last industrial revolution - the digital one in the 1960s - and it becomes clear that AGI and ASI would only create evolutionary automation plays in the long wake of those revolutionary waves.

I’ll explain.

Large Economic Models

Large Language Models (LLMs) are AGI’s current favorite building block, and they excel at predicting the next word in a sentence.

They can be used in isolation or as the foundation for applications such as OpenAI’s ChatGPT or Anthropic’s Claude.

These enhanced LLMs can go beyond predicting words, having the ability to infer and follow steps from written instructions. On a side note, I think Apple’s recent paper on limitations of LLM reasoning failed to explore that aspect, though I still agree machine reasoning is far from being achieved.

LLMs are impressive feats of engineering in either isolated or combined forms, but industrial revolutions require dramatic changes in industrial processes, which in turn require new forms of thinking.

That is where LLM-based technologies fall short.

Artificial or natural, language processing is neither novel nor scarce. A new industrial revolution is not being held at bay by the lack of people or machines that can process text.

Machines generating emails or scientific slop are more likely to produce heat than value.

Before we can predict the revolutionary impact of AGI and ASI technologies in our economic systems, we must understand the structure beneath those systems.

While major economic theories may have been proven flawed in light of new developments—and I may be proven very wrong in the future—those theories were still directionally accurate.

For example, the labor theories of value prevalent in the 18th century anchored the value of goods to the number of hours spent producing those goods. Those theories were laid to waste during the 2nd industrial revolution, but engineering effort (which can be measured in hours) and energy utilization (a direct measure of work) still play a significant role in determining the value of goods.

On the opposite side of still-relevant theories, revolutions create new lines of sight to previously impossible economic concepts. A great example is the creation of the Internet, which made network effects possible and supported the creation of the platform economy.

Either through old or new theories, the problem for AI revolutionaries is not one of predicting the future but one of understanding the economics of why society may not always value technological advancement above all else.

If we want a perspective on the true meaning of the word “unprecedented,” we need a brief history recap.

Liftoff - 1960-1979 - Where No Human Had Gone Before

In the 1960s, humanity witnessed the emergence of large-scale technological magic for the first time. Governments were pulling gigawatts of energy from rocks and corporations were modeling worlds using tiny bricks of processed sand.

Microchip-based computer systems guided us through the most important mission in history. Humanity was racing chariots with the gods, and from 1969 to 1972, the Moon became Earth’s backyard.

By 1971, Soviet landers and probes had touched Mars and Venus.

Nuclear technology threatened the world but was also on track to replace fossil fuels for industrial applications.

When combined, nuclear-powered probes and microchips opened the doors for deep space exploration.

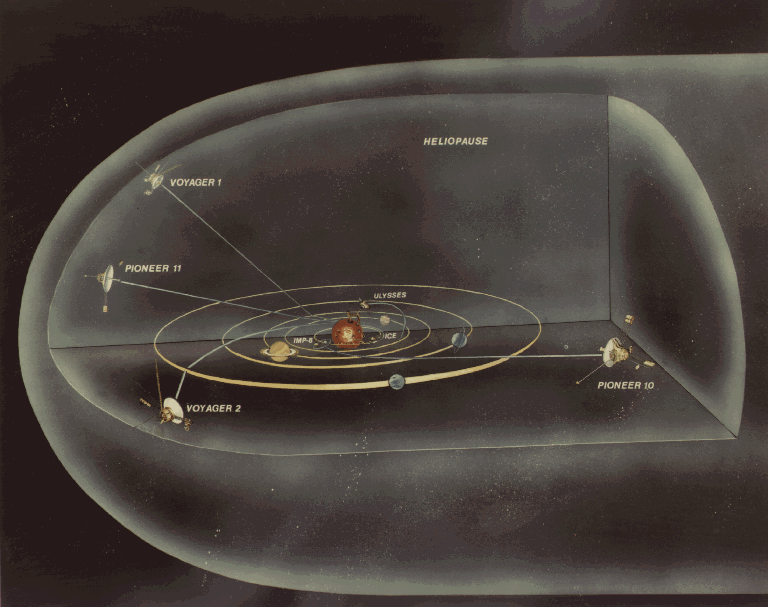

In 1977, humanity started its journey toward the outer reaches of our solar system with concrete plans for making first contact with interstellar civilizations.

Credit: NASA/JPL-Caltech - Voyager Illustrations

From the software side, we created programming languages and operating systems that power the core of the business world to this day.

In the ensuing decades, our popular culture pointed at flying cars and traveling to the stars.

From the infinitesimal to the infinite, there were no limits.

Between war and peace, our world would never be the same.

Ascent - 1980-1999 - The Internet Booster Burn

Deploying new reactors was expensive and time-consuming; by 1980, nuclear energy amounted to a mere 2% of global energy production. As a result, the world economy entered the 1980s reeling in the aftermath of the 1979 oil crisis.

But no matter, the 1980s also gave us the birth of the Internet (1983), IBM PCs (1981), Microsoft Windows (1985), and even the first seeds of quantum computing technology.

The 1990s gave us the World Wide Web and Linux (1991). Later in the decade, we also had the first commercial Bluetooth and WiFi devices.

Personal computers, wireless connectivity, a planet-wide network, and a (then) small company named Google brought it all together.

By the end of the decade, humankind took its second giant leap.

We were no longer connected virtually through a shared experience. We were connected, full stop.

We transitioned from making history to making stories. Moon landings and dreams of space colonization became distant memories. We became an old couple watching family photos, filling the void by looking inward.

With no floodgates left to hold back communication between industry and consumers, the Internet fueled unrelenting economic growth, punctuated by Alan Greenspan’s “irrational exuberance” speech in 1996.

Our space dreams gave way to a pragmatism of space stations and low-orbit flights. We ramped up the Space Shuttle Program with its first crewed flight in 1981 and launched the International Space Station program in 1993.

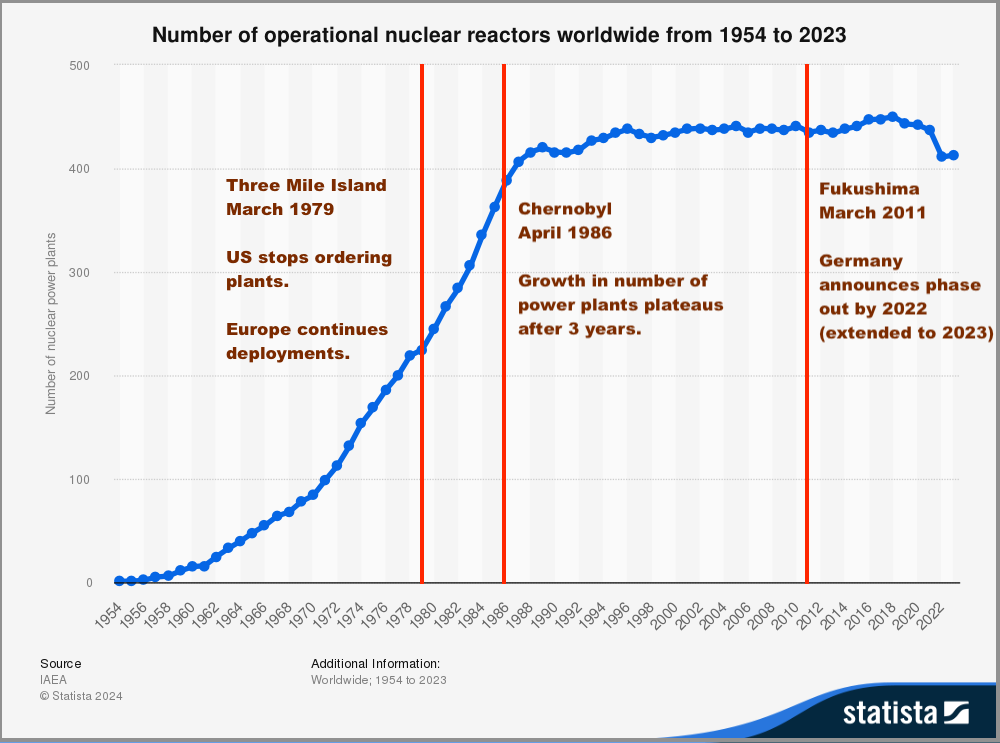

On the energy front, the world grappled with the Chernobyl explosion (April of 1986), raising the specter of mass contamination of the entire European continent and reviving the public anxiety about the safety of nuclear power plants.

Protests led to moratoriums and immediate halts in orders for new reactors.

Installed capacity continued to grow but at a much slower pace, and decades of breathless growth in nuclear output came to an end.

Source: Statista’s “Number of operational nuclear reactors worldwide from 1954 to 2023”

The possibilities were still infinite, but we seemed content with rediscovering the world from our living rooms.

Reentry - 2000-2012 - Flight Turbulence and Hard Landing

Those years were marked by higher highs and lower lows.

The early 2000s were a nightmare on all fronts, from economy to technology.

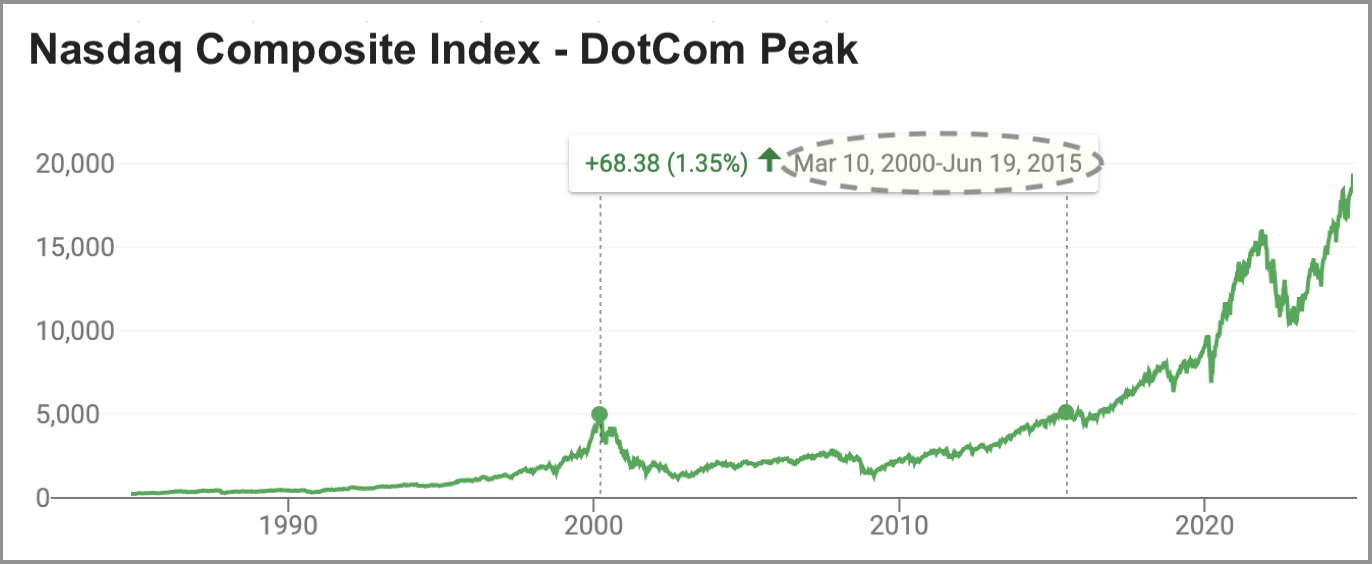

In March 2000, the global markets peaked right before the popping of the dot-com bubble, triggering a seemingly endless free fall of all major indexes.

The Nasdaq Composite, the hardest hit among them, would lose over 70% of its value by the end of 2002.

In between the highs and lows, we witnessed the literal collapse of the global seat of economic power on September 11th of 2001.

Source: Nasdaq Composite Index Historical Data

In the skies, Space Shuttle Columbia disintegrated on atmospheric reentry (February of 2003), reviving the trauma of Space Shuttle Challenger’s explosion during liftoff in 1986.

We could not catch a break.

On the energy front, gas fracking and shale oil extraction were breaking - and shaking - new ground. Excess production and lower prices put significant pressure on deploying new reactors.

Incidentally, the entire planet shook in March of 2011 with the Tohoku earthquake and the ensuing tsunami that engulfed the coast of Japan.

With Fukushima reactors still melting under receding ocean waters, weary German officials made the landmark decision to phase out Germany’s nuclear reactors, joined by Switzerland in a similar fashion.

Nuclear power generation would never again break its all-time high of 2006.

Back to the economy, the markets remained unable to generate real value for several years and resorted to fake valuations, inflating a real estate bubble that culminated with the 2007-2008 near destruction of the global economy.

On July 21st, 2011, Space Shuttle Atlantis landed at NASA’s Kennedy Space Center in Florida, marking the end of the Space Shuttle Program. With SpaceX’s finances and first orbital flights on shaky ground for most of the 2000s, space exploration turned into space research, constrained to resupply missions to the ISS.

On the technology front, with the launch of Facebook (2004), YouTube (2005), and the delivery of the first iPhones (in 2007), we had the historical equivalent of the invention of the printing press. While the physical presses created the large-scale ability to reproduce content for the masses, these technologies gave the masses the means to produce and distribute their own content.

The possibilities were still infinite, but we were more distracted than ever, and the cultural and industrial revolutions of the 1960s were finally over.

There were two extremely bright spots:

-

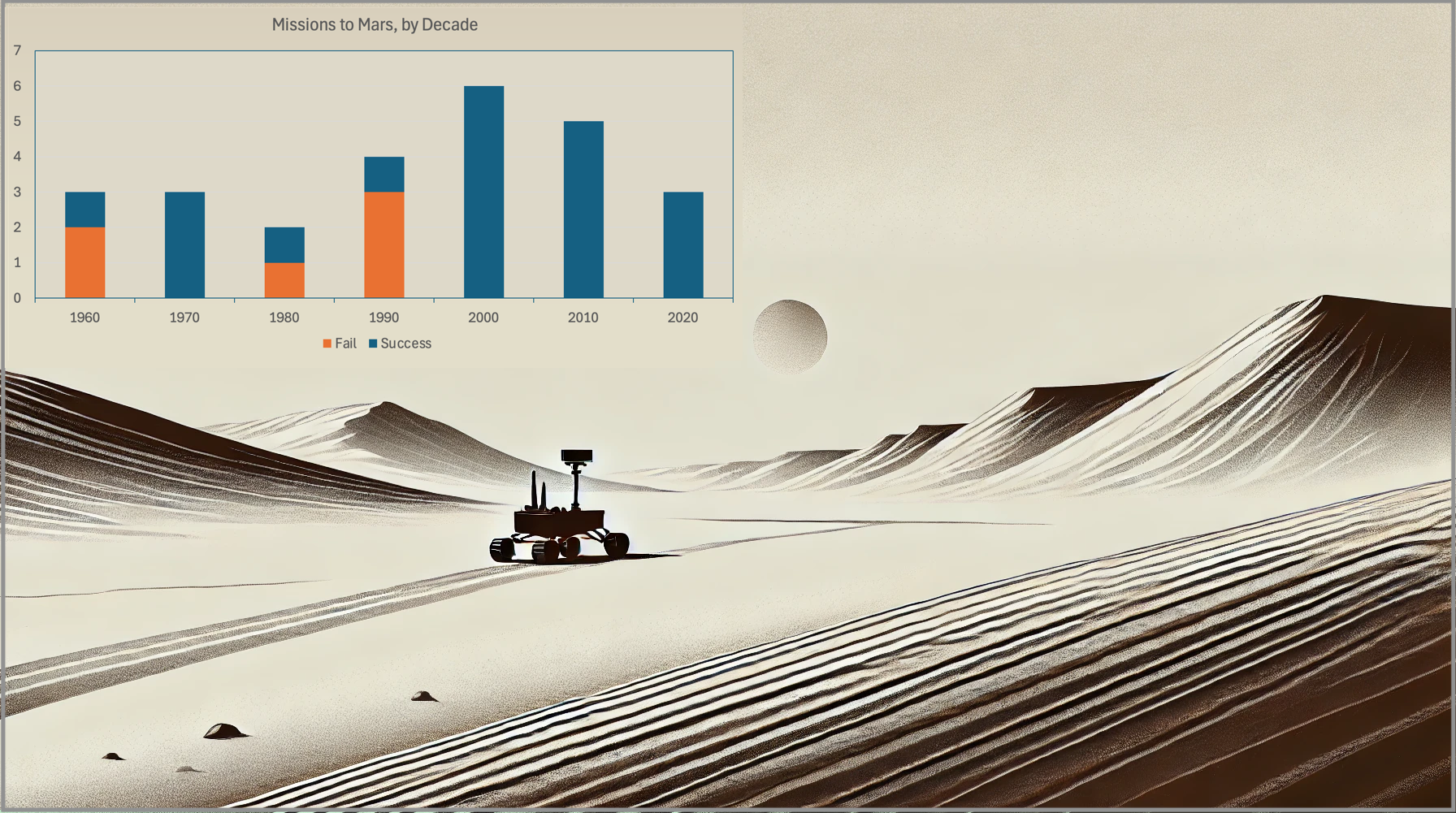

NASA solidified its shift from hovers to rovers, following Sojourner’s pathfinding mission to Mars with Spirit (January of 2004,) Opportunity (January of 2004,) and Curiosity (August of 2012.) India, EU, Russia, China, and UAE joined USA and the Soviet Union in successfully delivering orbiters and rovers to Mars orbit and soil.

-

Throughout the 2010s, several manufacturers released the first wave of commercially available Quantum computers. In time, these mind-bending machines are the only computing technology with the potential to rival the advent of microchips.

2012-2020 - IT’s Mission to the Clouds

I wrote this section as a separate chapter because we tech folks still had to make one final push in the long wake of the 1960s revolutionary winds.

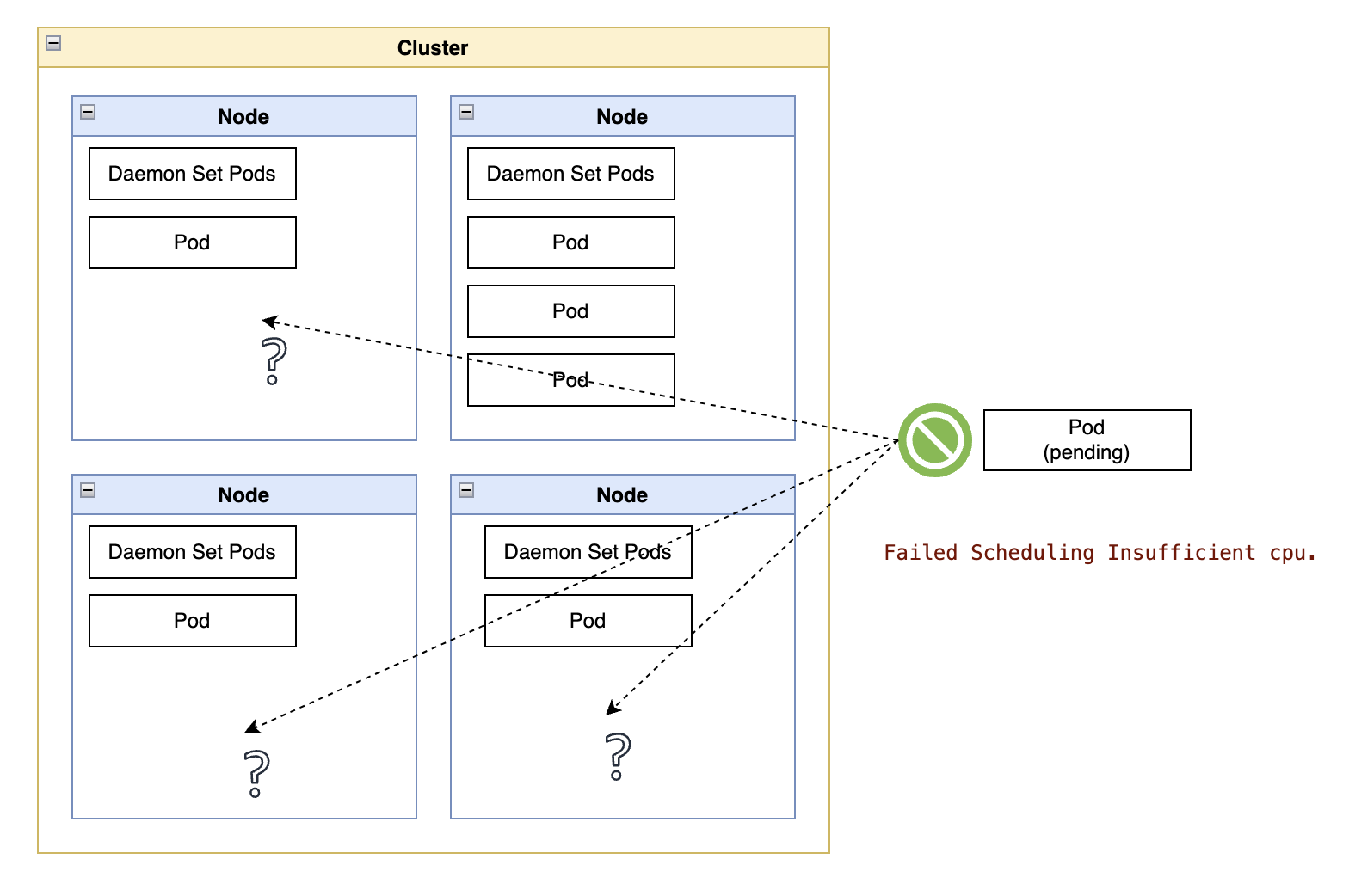

This cycle is a stark lesson in the economics of supply and demand, acting as a cautionary tale about why we are overestimating the demand for LLM-based technologies.

With space colonization and fossil fuel replacements sputtering in the early 2010s, computer technology was still going, with Moore’s Law in full effect.

By 2011, software was eating the world, but the hardware was crowding us out.

Thanks to a combination of RFID, ever-cheaper WiFi routers, and a slew of IoT devices, computers were everywhere.

Those computers everywhere became a bit of a problem because we ran out of two critical resources:

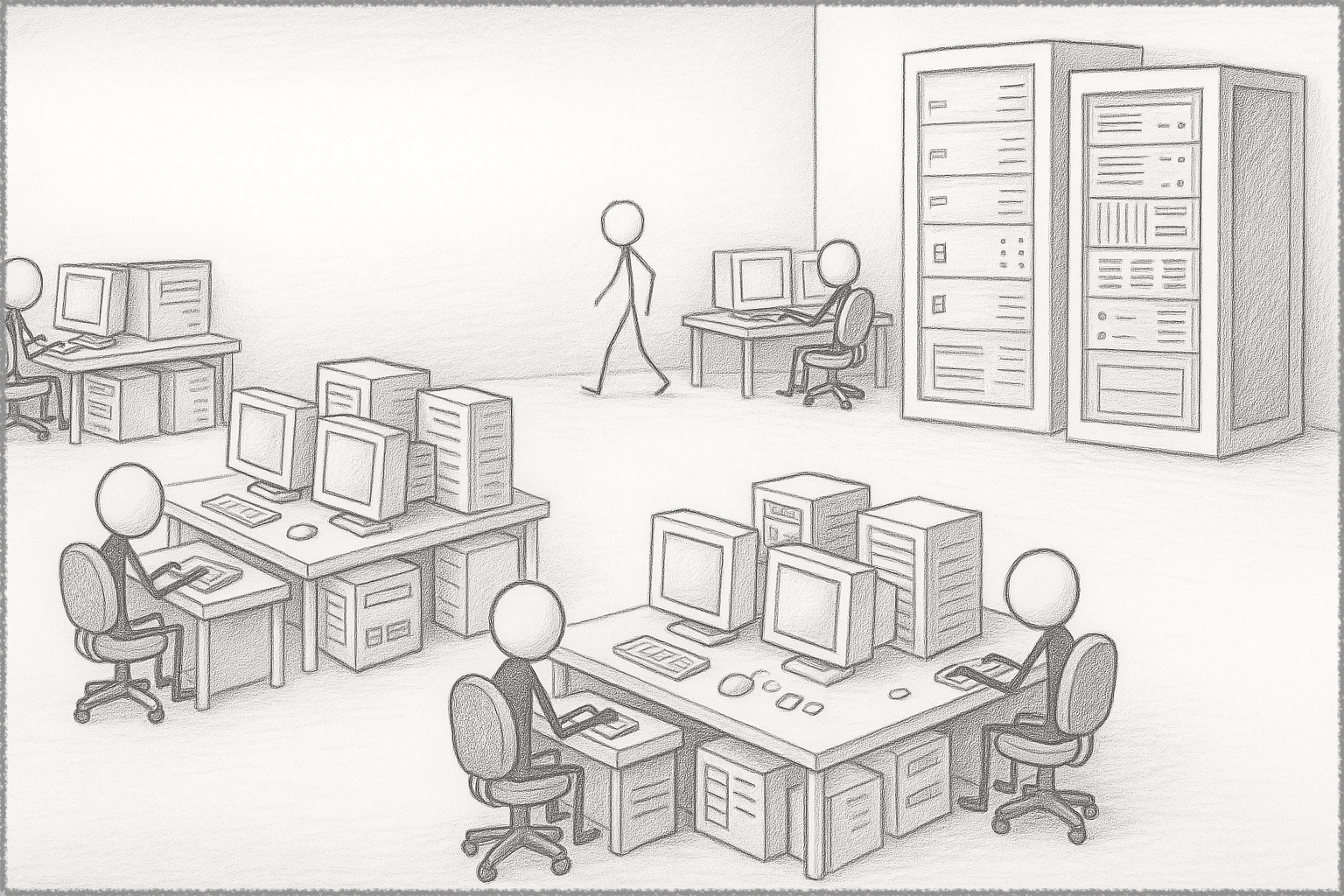

- Physical space. We were overrun by computer cabinets, racks, power supplies, and cables.

- People. We didn’t have enough people to manage those servers.

Every small office and shop had a fleet of small towers spread from front to back. Unsightly metal boxes were laid down in improvised corners, tethered to an entanglement of cables connecting desks and rows of shelves.

In software development, we created parallel hierarchies between seniority and hardware ownership. At the top of that hierarchy, senior developers held the questionable distinction of managing a computing sprawl of workstations, mid-range servers under their desks, and often, high-powered test servers hosted in a secured area in the building.

We had IT people running around with carts and cables, performing various forms of inventory, maintenance, and repairs.

Cloud computing started to take root at the center of the technology industry because it solved the first problem (physical space) and helped with the second one (people). Economies of scale and an imperative of market capture generated an industry of freemium service offerings backed by an unsustainable reliance on open-source quasi-products.

But even so, organizations began questioning the cost of running their operations and looking for ways to spend less on their cloud accounts, hardware, and personnel.

IT budgets went out of control, and jargon started shifting toward expressions like “business value,” “consolidation,” “time to market,” “mining existing data,” and many other terms indicating we had entered the “Town Planners” phase of the “Pioneers, Settlers, Town Planners” cycle described by Simon Wardley.

With limited growth caused by stiff competition and market cannibalization from hyperscalers, middleware providers accurately sensed the end of the decades-long market-capture cycle, prompting a series of defensive moves and monetization plays, from MongoDB in 2018 to Confluent’s Kafka in 2018 to Elastic in 2021. As more recent examples, we had HashiCorp’s Terraform in 2023, Redis Labs and, as a variation on the theme, WordPress’ fiasco with the WP Engine ban.

We held the keys to the virtual universe, but the possibilities were no longer infinite.

Society, industry, and governments started questioning the price tag, much like they questioned the escalating prices of exploring the physical universe.

LLM-based technologies, however useful, are still just one of the many moving parts in the same overextended business case for the virtual universe.

The LLM Ballast

With these historic arcs in the rearview mirror, we can revisit the claims of an LLM revolution around the corner.

Even if we can get to scientific-research levels of AGI and ASI - a reality-defying if - we have not been short of ideas.

Before LLMs, and by several measures from patent applications to research papers, the generation of new ideas has already reached renaissance levels of creativity and innovation. LLM advocates may point at AI’s ability to generate more novel-sounding paper titles, which only reinforces the point that LLMs are very good with words.

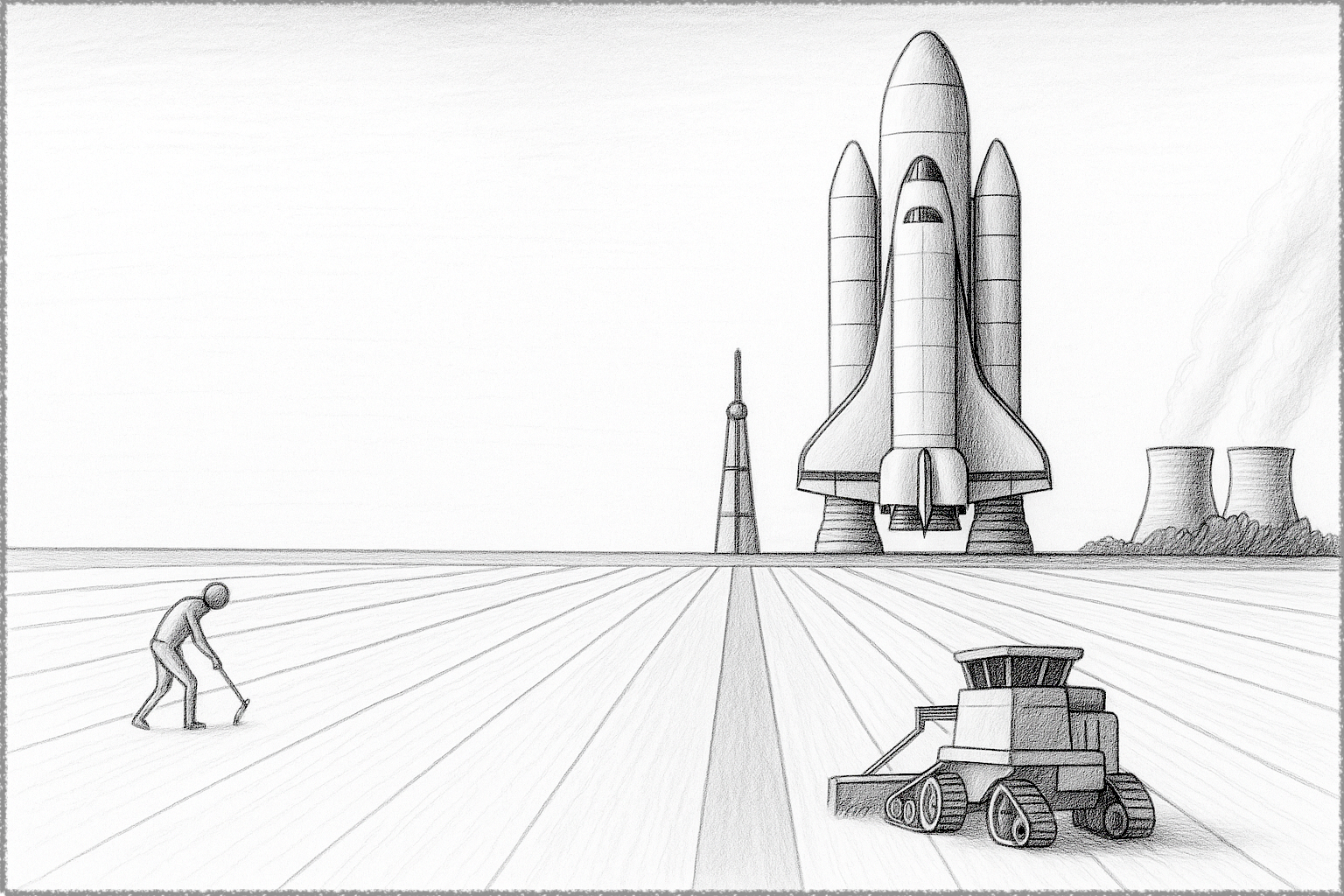

From an economic perspective, replicating the exponential gains of the third industrial revolution requires technologies that significantly advance the productive processes in the real world, which in turn requires new forms of production and new energy sources.

The tech industry, however, has been trying too hard to extend its reach with a self-referential model in which the physical world is subordinate to the virtual one, first with NFTs and now with LLMs.

And look, for all the doom and gloom of a nuclear-power industry that had seemingly lost the battle against renewable energy sources like solar and wind, the irony of a nuclear power renaissance driven by AI energy demand is not lost on me.

Setting aside the nuclear silver lining of our IT clouds, the economics of sending energy from the real world to build AI castles in the nether are terrible, backed only by the unsustainable math of market capture.

The Real World is the Real World

We may confuse our momentary fascination with electronic distractions for actual demand. However, the complex reality of our biological evolution remains a basic foundation of any economic and productive system.

Every systemic attempt to immerse humanity deeper in virtual worlds, from 3D TVs to simulations of the physical world and immersive entertainment, has quickly plateaued at relatively disappointing numbers.

Case in point, the market for VR goggles is about 20% of the market for earphones. And no, it is not the price points.

In “The Artificial Empathy of Generative AI,” I wrote about generative AI hitting the same dissonant chord with humans. In hindsight, I missed an essential point about the value of human interaction going beyond language and into the expectation of being the subject of attention from other human beings.

While I love LLM technology and have found potential uses for it, the economic foundations of an LLM revolution are shakily deposited atop improbable reversals in the dynamics of energy consumption and human evolution.

More than generated words, revolutions need enthusiasm and creativity.

That is why I think the next industrial revolution will be one of significant developments in energy generation and materials engineering. And because Earth’s atmosphere may be ill-equipped to dissipate the heat from energy generation at those scales, an eventual colony on the surface of Mars or the Moon may be the catalyst we need.

We may be decades or even centuries away from such a future.

In fact, I hope it does take that long, just like computers took decades to shrink from office-sized machinery to miniaturized marvels.

A prolonged revolution means the technological imperatives of creativity and sustainability required to support those colonies would impact multiple generations of builders and aspiring engineers.

With the observed excitement over SpaceX’s recent successes with crewed flights and reusable boosters, one can only imagine the planet-wide euphoria of witnessing the first humans setting foot on Mars.

Whether on Mars or the Moon, a terraforming program would transform industry, society, and culture. Massive feats of engineering streaking the skies, from planes to rockets, have always inspired our collective attention like nothing else.

Looking up from our phones, we may finally wake up from our slumber and stop being distracted by machines that can imitate our past.

Intelligence?

We already have a surplus.